Chiradeep Vittal · blog · 6 min read

Your AI Coding Assistant is Living Groundhog Day

AI coding assistants are remarkably capable, but they wake up every session with complete amnesia. Here's why that matters for your engineering team and what it means for productivity.

Live, Die, Repeat

Remember Tom Cruise in Edge of Tomorrow? He dies, wakes up, and fights the same battle over and over, gradually getting better because he remembers everything from previous loops. Now imagine if he forgot everything after each death while Emily Blunt had to re-teach him the same combat moves every single day.

That’s your AI coding assistant.

Living with Anterograde Amnesia

(Yes, that’s a third movie reference)

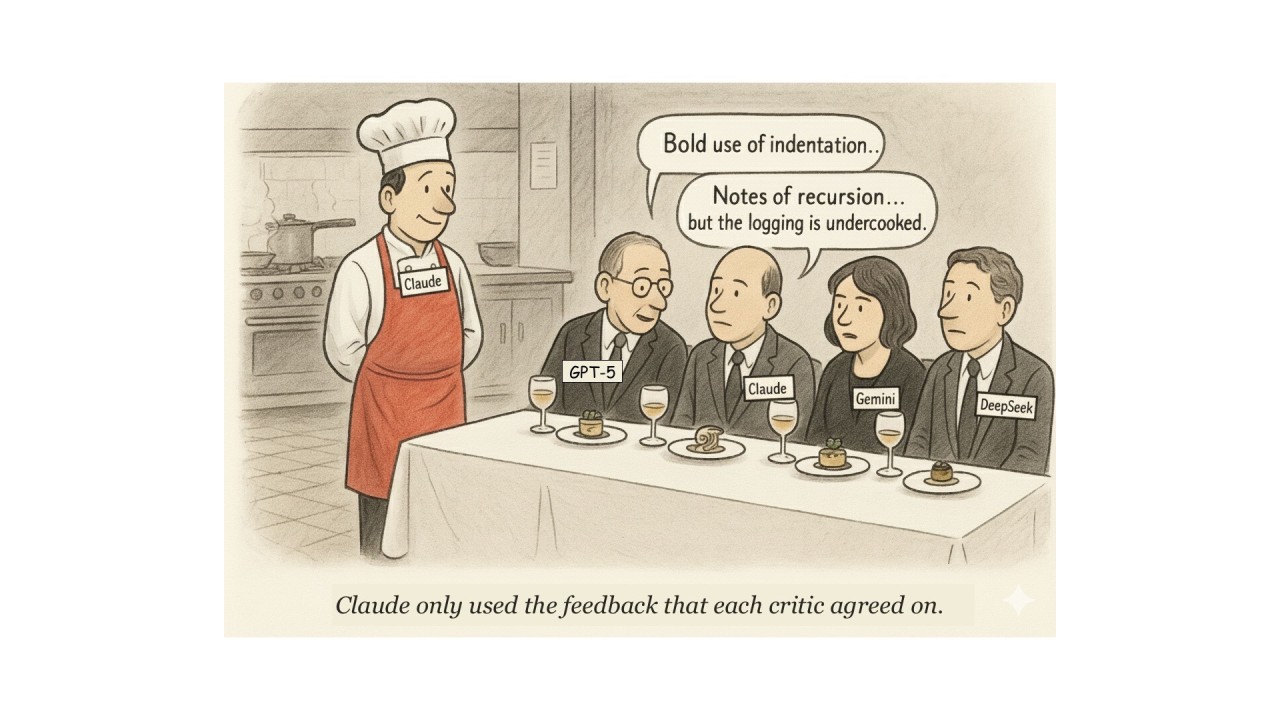

Every day, developers across the industry open new sessions with Claude, Cursor, or Codex to work on their codebases. And every day, these remarkably capable assistants wake up with complete amnesia about the previous sessions.

That payment processing module you carefully explained yesterday? Your AI assistant has no memory of it. The rate limit on your partner’s API that caused last month’s outage? Gone. The compliance requirement that means all financial data must be encrypted with a specific algorithm? Your AI assistant is hearing about it for the first time. Again.

We’ve built coding assistants with extraordinary abilities—they can write complex algorithms, refactor legacy code, and debug subtle issues—but they wake up every session in the middle of an ongoing battle they don’t remember joining.

The README Problem

But unlike Tom Cruise’s battlefield, software projects aren’t just about memorizing where enemies appear. Knowledge management researchers Nonaka and Takeuchi identified two types of knowledge in organizations:

Explicit knowledge: What’s written down, such as JIRA tickets, PRDs, onboarding guides, READMEs, and API specifications. Your AI assistant can read this (if you provide it).

Tacit knowledge: What experienced developers “just know”: that the authentication service has an unusual token refresh pattern because of a legacy mobile app, that user data needs special handling, that the seemingly redundant database index prevents a specific customer’s reports from timing out.

By commonly cited estimates, up to 70% of organizational knowledge is tacit. In software teams, this includes:

- Why certain architectural decisions were made (and which alternatives failed)

- Which code is fragile and needs careful handling

- Security invariants like authorization rules, data validation constraints, and access control patterns

- Why security controls are inconsistently applied across the codebase

- Implicit security assumptions that should be explicitly enforced

- Unwritten team conventions and coding patterns

- Performance assumptions based on real customer usage

- Political dynamics around certain modules or features

Your AI assistant starts each session missing 70% of what your junior developer learned in their first month.

The Coordination Cascade

The challenge multiplies across teams. In Edge of Tomorrow, Tom Cruise eventually coordinates perfectly with his squad because he’s learned all their moves. But imagine multiple soldiers, each in their own time loop, unable to share memories.

That’s your organization using AI assistants:

- The developer’s AI assistant learns about API constraints, then forgets

- The QA engineer’s AI assistant rediscovers the same constraints while writing tests, then forgets

- The security team’s AI assistant identifies the same issues during threat modeling, then forgets

- The DevOps team’s AI assistant stumbles upon the same limitations during deployment planning, then forgets

Each team member becomes their own Emily Blunt, exhaustedly re-explaining context to an assistant that can’t remember yesterday’s battles. For non-developers, the situation is worse, since they are locked out of even the explicit knowledge.

The Half-Life of Tacit Knowledge

Not all knowledge is created equal. Some tacit knowledge is critical and enduring—security invariants that must never be violated, performance thresholds that keep the system stable. Other knowledge has a shorter half-life—the workaround for a bug in version 3.2 of a dependency, the special deployment process for customer X who’s migrating next quarter.

The challenge deepens when you realize that even “explicit” knowledge often isn’t where AI assistants can reach it. Critical architectural decisions live in commit messages from three years ago. The reason for that unusual validation pattern? It’s in a code review comment thread. The security requirement that shaped the entire authentication system? Buried in an email chain between the security team and the CTO.

Meanwhile, tacit knowledge spreads through entirely human channels. Developers learn about the fragile parts of the codebase during debugging sessions at 2 AM. They discover unwritten rules in sprint planning meetings. They absorb security best practices during incident response. They pick up performance optimization tricks through pair programming. The break room conversation about why the previous architecture failed becomes tribal knowledge that shapes every future decision.

Your AI assistant has access to none of these transmission channels. It reads the code and the documentation you explicitly provide, missing the rich context that flows through every other communication pathway in your organization. And unlike a human developer who accumulates this knowledge over time, building an increasingly accurate mental model, the AI assistant’s understanding resets to zero with each new session—even as the underlying knowledge continues to evolve and decay.

Research on software teams suggests they spend a substantial share of their time sharing context or waiting for it. With AI assistants, this overhead repeats indefinitely: a tax on productivity that compounds with each new session.

What This Means for Engineering Leaders

The good news: AI coding assistants are genuinely transformative when working on well-defined, context-light problems. They excel at algorithm implementation, bug fixing in isolated code, and generating boilerplate.

The reality check: For mature codebases with rich tacit knowledge, every session still starts at zero. Your team isn’t getting the compound benefits of an AI that learns your system over time—they’re getting powerful but perpetually naive assistance.

Every day, a vibe-coding expert expounds a new “system” that claims to solve this problem. Unfortunately, what works for them (usually a lone coder) often doesn’t carry over to a team. But we’re still in the early loops of this particular battle.

The Path Forward

Unlike Tom Cruise’s character, we can’t solve this through repetition alone. The solution requires deliberately capturing and preserving context across sessions—turning tacit knowledge into explicit knowledge that AI assistants can consume.
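To make "capturing and preserving context across sessions" a little more concrete, here is a minimal sketch in Python. It is not a description of any particular tool. The `ContextNote` schema, the function names, and the expiry dates are all hypothetical, and the example notes are borrowed from the scenarios earlier in this post. The idea is simply that tacit knowledge gets written down once, short-lived items carry an expiry date, and whatever is still current is rendered into a preamble that can be pasted into (or loaded by) a new assistant session.

```python
import json
from dataclasses import dataclass, asdict
from datetime import date
from pathlib import Path
from typing import List, Optional


@dataclass
class ContextNote:
    """One piece of formerly tacit knowledge, written down (hypothetical schema)."""
    topic: str
    note: str
    # ISO date string for knowledge with a short half-life; None means enduring.
    expires: Optional[str] = None

    def is_current(self, today: date) -> bool:
        # A note stays current through its expiry date, inclusive.
        return self.expires is None or date.fromisoformat(self.expires) >= today


def save_notes(path: Path, notes: List[ContextNote]) -> None:
    """Persist notes as JSON so every teammate's session reads the same file."""
    path.write_text(json.dumps([asdict(n) for n in notes], indent=2))


def load_notes(path: Path) -> List[ContextNote]:
    """Load notes, returning an empty list if nothing has been captured yet."""
    if not path.exists():
        return []
    return [ContextNote(**item) for item in json.loads(path.read_text())]


def session_preamble(notes: List[ContextNote], today: date) -> str:
    """Render only the still-current notes as text for the start of a session."""
    lines = [f"- [{n.topic}] {n.note}" for n in notes if n.is_current(today)]
    return "Project context:\n" + "\n".join(lines)


if __name__ == "__main__":
    notes = [
        ContextNote("auth", "Token refresh is unusual because of a legacy mobile app."),
        # The expiry date below is made up for illustration.
        ContextNote("deps", "Workaround in place for a bug in version 3.2 of a dependency.",
                    expires="2024-01-31"),
    ]
    print(session_preamble(notes, date.today()))
```

A shared file like this is deliberately low-tech: it can live in the repository, be reviewed like code, and be read by the developer's, QA engineer's, and security team's assistants alike. It does nothing to capture knowledge automatically, which is the hard part.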

Until that happens, your AI coding assistant remains a brilliant soldier with perfect combat skills who wakes up every morning having forgotten the war. And your senior engineers remain Emily Blunt, patiently explaining, again, why the safety needs to be clicked twice.

AI assistants deliver tremendous value despite starting from zero every session. But that ‘despite’ is doing a lot of heavy lifting. The question is: How many times are you willing to teach them the same battle?

What strategies has your team developed for managing context with AI coding assistants? Share your experiences and favorite ‘time loop’ movies in the comments.