· Pratik Roychowdhury · blog · 5 min read

5 Ingredients That Make an AI Agent Truly Successful (and Why "95% Fail")

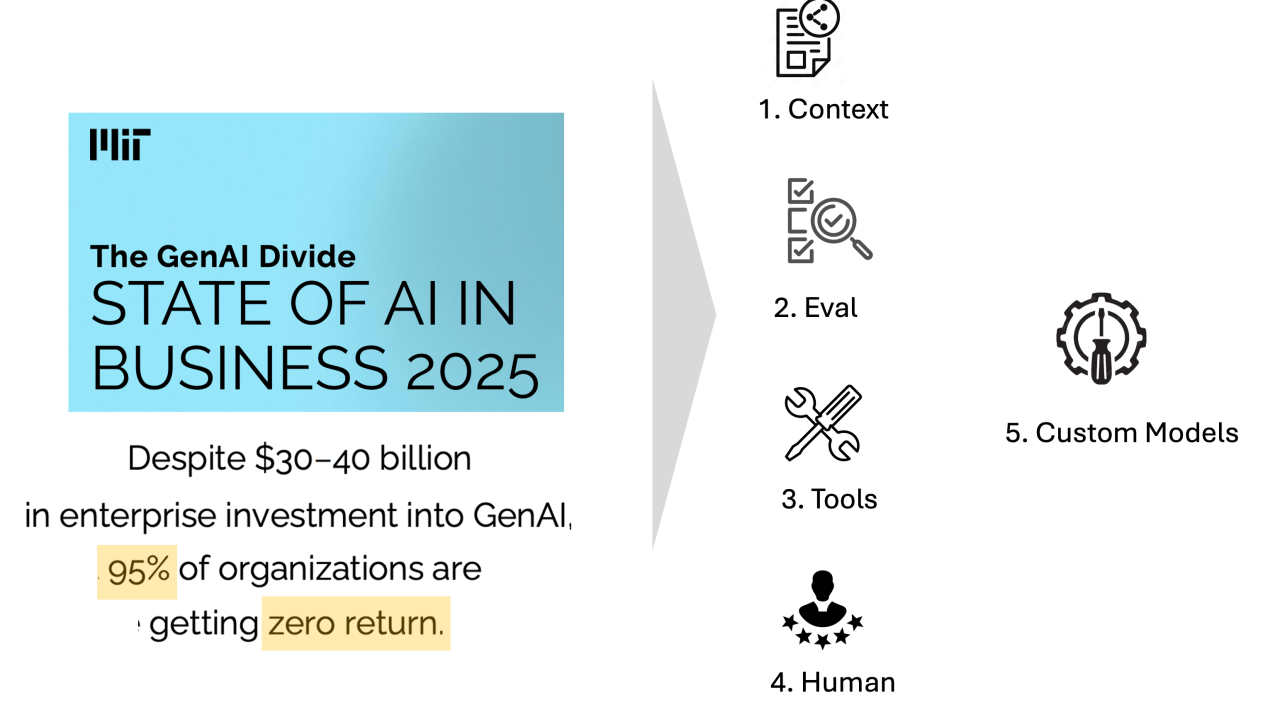

The State of AI in Business 2025 report reveals that 95% of generative AI pilots fail to deliver measurable business value. Here's what separates the successful 5% from the rest.

The State of AI in Business 2025 report has sparked numerous discussions across the tech industry. The report says that 95% of generative AI pilots fail to deliver measurable business value. Only 5% are showing real P&L impact.

That statistic is staggering, and it explains why so many executives remain skeptical of AI agents. Quite often, these are flashy demos that collapse when exposed to messy, real-world conditions. In fact, in a previous blog, I shared a similar experience we had. Getting an AI agent to ~70% accuracy was relatively straightforward, but pushing beyond that plateau required a much deeper rethink and effort.

But here’s the thing:

Foundational models already have tremendous reasoning power, and that power is only going to increase. Not leveraging that power because of a “we don’t want to be just another GPT wrapper” mentality is a fallacy.

Frontier model providers are pouring billions of dollars into training models on data and infrastructure that most companies will never have access to.

So leverage all that investment!

The problem isn’t the models. The real problem is whether we’re equipping agents with the right scaffolding to succeed.

And this is where most organizations go wrong. They either ship a lightweight LLM wrapper -or- worse, rush to build a custom or fine-tuned model (step 5) without nailing the fundamentals (steps 1 - 4). The result? They join the 95% that fail.

Here’s what the path to agent success really looks like:

1. Context

Without context, even the smartest agent is left to guess. But providing unnecessarily huge amount of context is another fallacy, because overwhelming an agent with more context than it needs, confuses it and makes it underperform. The art lies in providing the right and relevant context for the LLM to perform effectively.

- A customer support agent without product manuals defaults to generic ChatGPT-like responses. With manuals, troubleshooting guides, and support ticket history, its answers become specific and useful.

- A product security agent writing security requirements needs JIRA epics, PRDs, architecture docs, and other organizational context. Without them, it will misunderstand trust boundaries and its recommendations will not align with actual product risks.

2. Evals & Metrics

One of the biggest hurdles that agents need to cross is the problem of accuracy. And one of the biggest reasons for that is the lack of metrics and proper evals. Defining the task-specific metrics is the first step, and then measuring the performance of the agent against those metrics becomes extremely critical.

- In code review, the eval isn’t “does this sound right?” I’ve heard this phrase “Eyeballing is not evaluation.” It’s: “how often did AI flag the same issues a senior engineer would?”

- In threat modeling, an AppSec engineer might review an agent’s STRIDE analysis and highlight the risks it consistently misses.

The State of AI in Business 2025 report emphasizes that lack of clear metrics is one of the top reasons pilots stall out. Without metrics and evals, AI remains a lab experiment instead of a business driver.

3. Tool Calling (including MCP servers)

Reasoning is the brain. Tools are the hands. The agent requires appropriate access to the tools (including MCP, i.e. Model Context Protocol, servers) to perform the necessary actions during an agent run.

- A financial agent without trading APIs can only make suggestions. With APIs, it rebalances portfolios in real time.

- A security audit agent paired with scanners, log pipelines, and an MCP server can orchestrate scans, fetch logs, and retry gracefully when things fail.

Salesforce has noted that the challenge isn’t making agents sound intelligent - it’s making sure they can act reliably with tools and orchestration.

4. Guidance and Oversight from Domain Experts (or Human in the loop)

This is another area where a lot of AI agents fail. AI agents are fast, but not infallible. This is an absolute must because:

(1) Human expertise keeps them grounded and trustworthy.

- For example, in healthcare, doctors reviewing AI-generated patient notes ensure compliance and accuracy.

- In product security, a pen-tester spotting that an agent is stuck brute-forcing passwords can redirect it to a more fruitful path, like checking for exposed S3 buckets.

(2) In reality, many agents cannot operate in a single-shot. In order for them to operate, they need to interact with a human to guide them towards success. As the agents start learning from these inputs, it can start operating in a more autonomous manner.

Expert oversight in the form of human-in-the-loop isn’t optional - it’s essential, today.

5. Custom, Fine-Tuned, and Distilled Models

This should be the last optional step - not the first, nor required. Why?

Firstly, fine-tuning or creating custom models is time-consuming. Secondly, frontier models evolve faster than any single enterprise can keep up with. They’ll likely spend heavily to replicate capabilities that frontier model providers are already improving at scale with billions of dollars of investment. And finally, many of the frontier models have access to data sources (through partnerships etc.) that you will never have.

Still, custom models have their place:

- Fine-tuned models adapt to your workflows and terminology.

- Distilled models make specialized knowledge faster and cheaper.

- Custom models shine in high-stakes, domain-specific cases where you control data that frontier models will never have access to.

The report warns that companies rushing into custom models without mastering steps 1-4 often fail fast. Gartner predicts that over 40% of agentic AI projects will be scrapped by 2027 due to cost overruns and misalignment with business value.

Putting It All Together

The skepticism around AI agents is justified, but not because the models lack intelligence. It’s because most implementations skip the fundamentals.

- Context makes agents relevant.

- Evals make them accountable.

- Tools make them capable.

- Expert guidance makes them trustworthy.

- (Optional) Custom models make them precise.

The lesson is simple: start with steps 1-4, then optionally adopt step 5.

If an organization does this their AI agents won’t be another flashy demo. They’ll become dependable teammates that deliver measurable business impact.