Chiradeep Vittal · blog · 5 min read

Can LLMs Review Code Effectively? - Notes from the field

As LLMs generate more code, using them for code reviews seems natural. But are they ready? A look at the latest research and practical experience reveals both promise and limitations.

No software goes live without someone reviewing it (right? right!?). So as LLMs generate more code, I’ve been using them to do reviews too — and it turns out, they’re not bad. But not great either.

If you’ve worked with these models, you’ve probably seen both ends of the spectrum. Sometimes they impress — pointing out edge cases or suggesting better naming. Other times they hallucinate or confidently miss the point. I’ve been digging into the latest research, experimenting in my own workflow, and talking to teams trying to make AI review a standard part of their process. And while there’s no universal answer yet, the signal is getting clearer.

What the Research Says

A recent benchmark called SWR-Bench (https://arxiv.org/html/2509.01494v1) tested the latest models (GPT-4o, Claude Opus 4, Gemini 2.5, and a number of open-source models) on real GitHub pull requests. The results were sobering. Even the best-performing combination achieved only a 19% F1 score, which the researchers deemed insufficient for practical deployment. F1 is the harmonic mean of recall (catching real issues) and precision (avoiding false alarms), so a 19% score means the tools miss most problems while still generating too much noise. The main culprit wasn’t just missing complex issues; it was false positives. LLMs flagged numerous non-issues, which would overwhelm developers.
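To make the F1 number concrete, here is a small sketch of how it is computed. The counts below are made up for illustration and are not taken from the SWR-Bench paper; they only show how a reviewer that is noisy and also misses a lot lands near 19%.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical reviewer (illustrative numbers, not benchmark data):
# it posts 100 comments, 15 of which point at real issues,
# and the reviewed PRs contain 60 real issues in total.
true_positives = 15
comments_posted = 100
real_issues = 60

precision = true_positives / comments_posted  # share of comments that are real
recall = true_positives / real_issues         # share of real issues caught
f1 = f1_score(precision, recall)

print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

Because the harmonic mean is pulled toward the lower of the two inputs, a tool cannot buy a good F1 by flooding the PR with comments to raise recall.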

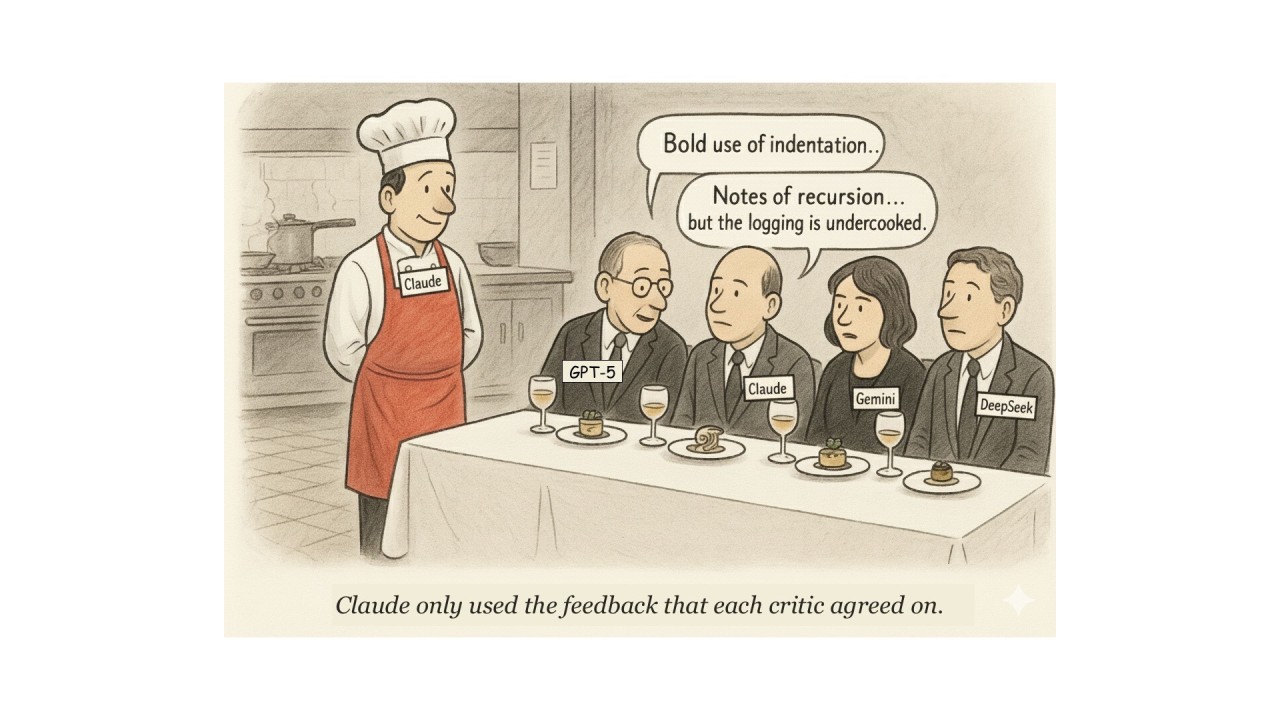

The models did show some interesting patterns: they performed better on functional issues like logic errors (24% F1) compared to code style and documentation improvements (15% F1). Interestingly, the benchmark found that running multiple review passes with the same model improved coverage. Think of it like getting three junior engineers to look at a PR and then summarizing the overlap. That tracks with what I’ve seen in practice too.
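Here is a rough sketch of what combining several review passes can look like. It assumes each pass has already been reduced to structured findings (file, line, category), which is my simplification: real LLM comments are free text and need some matching step first. The `Comment` type and `aggregate_passes` function are hypothetical names, not something from the benchmark.

```python
from collections import Counter
from typing import NamedTuple


class Comment(NamedTuple):
    path: str
    line: int
    category: str  # e.g. "logic" or "style"


def aggregate_passes(
    passes: list[list[Comment]], min_votes: int = 2
) -> list[tuple[Comment, int]]:
    """Return findings raised by at least min_votes passes, most agreed-on first."""
    votes: Counter = Counter()
    for review in passes:
        votes.update(set(review))  # count each finding once per pass
    kept = [(c, n) for c, n in votes.items() if n >= min_votes]
    return sorted(kept, key=lambda item: (-item[1], item[0]))


# Three hypothetical passes over the same PR.
passes = [
    [Comment("auth.py", 42, "logic"), Comment("api.py", 10, "style")],
    [Comment("auth.py", 42, "logic")],
    [Comment("auth.py", 42, "logic"), Comment("db.py", 7, "logic"),
     Comment("api.py", 10, "style")],
]

for comment, count in aggregate_passes(passes):
    print(f"{comment.path}:{comment.line} ({comment.category}) flagged by {count} passes")
```

Setting `min_votes=1` gives the union of all passes (more coverage, more noise), while a higher value keeps only the overlap.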

What’s Worked for Me

In my experience, context matters more than model choice. One thing that’s made a noticeable difference: giving the review agent access to the PRD or design spec. When the model understands why the change is being made, the quality of the comments improves quite a bit. It starts calibrating questions to the requirements: “Should this also update the authorization rules?” or “The API should validate the input string against these categories.” In the same vein, adding lots of detail to the PR description also helps a lot (this was discussed by the SWR-Bench authors as well).
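As a minimal sketch of what "giving the agent context" means in practice, the helper below assembles a review prompt from the diff, the PR description, and the spec. The function name, section headings, and instruction wording are all my own assumptions; adapt them to whatever tool you use.

```python
def build_review_prompt(diff: str, pr_description: str = "", spec: str = "") -> str:
    """Assemble a review prompt, putting the 'why' before the code change."""
    sections = ["You are reviewing a pull request."]
    if spec.strip():
        sections.append("## Requirements (PRD / design spec)\n" + spec.strip())
    if pr_description.strip():
        sections.append("## PR description\n" + pr_description.strip())
    sections.append("## Diff\n" + diff.strip())
    sections.append(
        "Check the change against the requirements above. "
        "Ask about anything the requirements imply but the diff does not cover."
    )
    return "\n\n".join(sections)


# Hypothetical inputs for illustration.
prompt = build_review_prompt(
    diff="+ def create_item(category: str): ...",
    pr_description="Adds an endpoint for creating items.",
    spec="The API must validate `category` against the allowed list.",
)
print(prompt)
```

Sections with no content are skipped, so the same helper works when all you have is the diff.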

Another thing that helps: asking the model to adopt a specific persona. My favorite? A hacker. When I prompt the model with “Review this code like a malicious actor looking for ways to exploit it,” I get very different — and often very useful — feedback. It starts paying attention to input validation, boundary conditions, and trust assumptions. You may not always want that perspective in every review, but it’s a great tool to have in the belt.
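One lightweight way to keep personas handy is a small lookup table of instructions that get prepended to the diff. This is only a sketch: the "hacker" wording is the prompt quoted above, while the other two entries and the names `PERSONAS` and `persona_prompt` are illustrative assumptions.

```python
PERSONAS = {
    "hacker": "Review this code like a malicious actor looking for ways to exploit it.",
    # The two entries below are illustrative wordings, not tested prompts.
    "quality": "Review this code as a code quality advocate focused on readability and maintainability.",
    "security": "Review this code as a security reviewer checking input validation and trust boundaries.",
}


def persona_prompt(persona: str, diff: str) -> str:
    """Prefix the diff with the instruction for the chosen persona."""
    if persona not in PERSONAS:
        known = ", ".join(sorted(PERSONAS))
        raise ValueError(f"unknown persona {persona!r}; expected one of: {known}")
    return f"{PERSONAS[persona]}\n\n{diff}"


print(persona_prompt("hacker", "+ query = 'SELECT * FROM users WHERE id = ' + user_id"))
```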

The researchers behind SWR-Bench also found that the review quality varied between code review tools: some tools had intricate prompt engineering and context engineering that produced superior results.

What Real Teams Are Doing

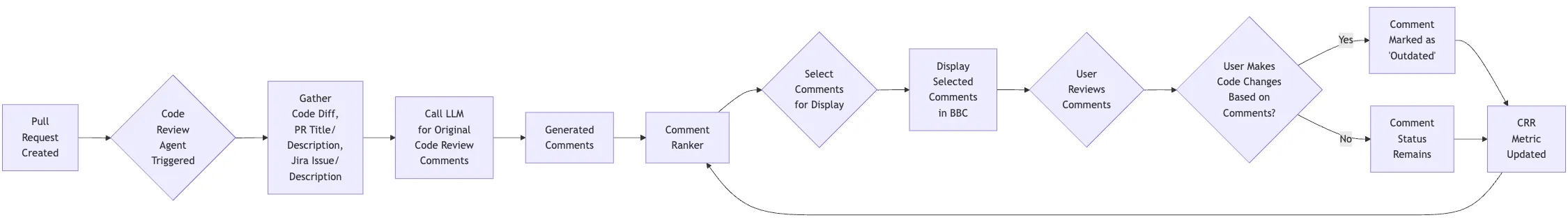

The team at Atlassian took a more structured approach. Their ML engineers built an ML classifier called CommentRanker to filter and surface LLM-generated suggestions in Bitbucket. Their internal study found what many of us have felt: AI comments can be helpful, but developers are quick to distrust anything that feels off. What worked for them was letting the LLM suggest, filtering the suggestions through CommentRanker, and then giving humans the final say. The value wasn’t in replacing reviewers, but in accelerating the repetitive parts and letting humans focus on judgment and nuance. That framing (acceleration, not automation) is becoming a theme.

(from: http://www.atlassian.com/blog/atlassian-engineering/ml-classifier-improving-quality)
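The suggest, filter, then human-decides shape is easy to sketch in general terms. The code below is not CommentRanker and does not reflect Atlassian's implementation; `Suggestion`, `filter_suggestions`, the threshold, and the toy scorer are all hypothetical stand-ins for a trained classifier.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Suggestion:
    path: str
    line: int
    text: str


def filter_suggestions(
    suggestions: list[Suggestion],
    score: Callable[[Suggestion], float],
    threshold: float = 0.7,
    max_comments: int = 5,
) -> list[Suggestion]:
    """Keep the highest-scoring suggestions at or above the threshold."""
    scored = [(score(s), s) for s in suggestions]
    kept = [pair for pair in scored if pair[0] >= threshold]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [s for _, s in kept[:max_comments]]


# Toy scorer for demonstration only; a real one would be a trained model.
def toy_score(s: Suggestion) -> float:
    return 0.9 if "unchecked" in s.text else 0.2


suggestions = [
    Suggestion("api.py", 10, "Consider renaming this variable."),
    Suggestion("auth.py", 42, "Token expiry is unchecked before use."),
]

# Only what survives the filter is shown to the human reviewer.
to_show = filter_suggestions(suggestions, toy_score)
for s in to_show:
    print(f"{s.path}:{s.line} {s.text}")
```

Capping the number of comments per PR is a separate knob from the score threshold; both limit how much noise a reviewer has to wade through.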

What Should You Do?

If you’re experimenting with LLMs for code review, here’s what I’d suggest — based on both research and hands-on work:

- Don’t use LLMs in isolation. Pair them with context: PR descriptions, related files, even Slack threads if you can.

- Try multiple runs. I often generate 2-3 review passes and skim for patterns. The union is usually more useful than any single one.

- Give them a point of view. Ask for feedback from a hacker’s perspective, or a code quality advocate, or a security reviewer.

- Measure the right things. Ask your team whether AI review actually saves them time or improves consistency.

- Don’t overpromise. Trust builds slowly. A bad review from an LLM can erode confidence quickly.

Final Thoughts

For me, the real question isn’t “Can an LLM review code?” It’s: What parts of the review process can we safely offload — and where does human attention still matter most?

LLMs can absolutely help. They can reduce the mental load of reviews, surface patterns, and provide another layer of quality control. But they need to be pointed in the right direction, given enough context, and used with judgment. If you’re leading a team, now’s a good time to experiment — and to figure out what kind of reviewer you want your AI to be. Because like any teammate, how you onboard them makes all the difference.