· Chiradeep Vittal · blog · 4 min read

Vibe Coding Is for Everyone, But Can Everyone Secure

Traditional security approaches—penetration testing, code reviews, compliance frameworks—assume human-written code and expert oversight. They're poorly suited to the scale and nature of vibe-coded applications. We need an approach as AI-native as the development process itself.

Vibe Coding Is for Everyone, But Can Everyone Secure?

Do you remember when AWS made everyone a “systems administrator”? Suddenly, any developer could spin up servers, databases, and storage without waiting for ops teams. But this democratization came with a cost—countless open S3 buckets, exposed admin consoles, and misconfigured IAM policies led to massive breaches at companies like Capital One, Equifax, and Accenture. Most developers could provision infrastructure but didn’t understand the security implications of default settings.

Today’s vibe coding movement promises an even bigger shift. Platforms like lovable.dev and bolt.new enable non-programmers to build functional micro-SaaS applications in days by simply describing what they want to AI. But like the emergence of the cloud era, this accessibility creates serious security problems.

The Security Knowledge Gap

For decades, software development has been organized around specialized teams—developers write code, security experts find vulnerabilities, operations teams manage deployment. Vibe coding breaks this model entirely. When a marketing manager can “code” a customer feedback app by chatting with an AI, who’s thinking about SQL injection prevention?

The security expertise that once lived in dedicated teams hasn’t magically transferred to every vibe coder. We’re trusting AI systems to embed decades of security knowledge into code generated for people who may not even recognize a vulnerability when they see one.

When Vibes Meet Reality

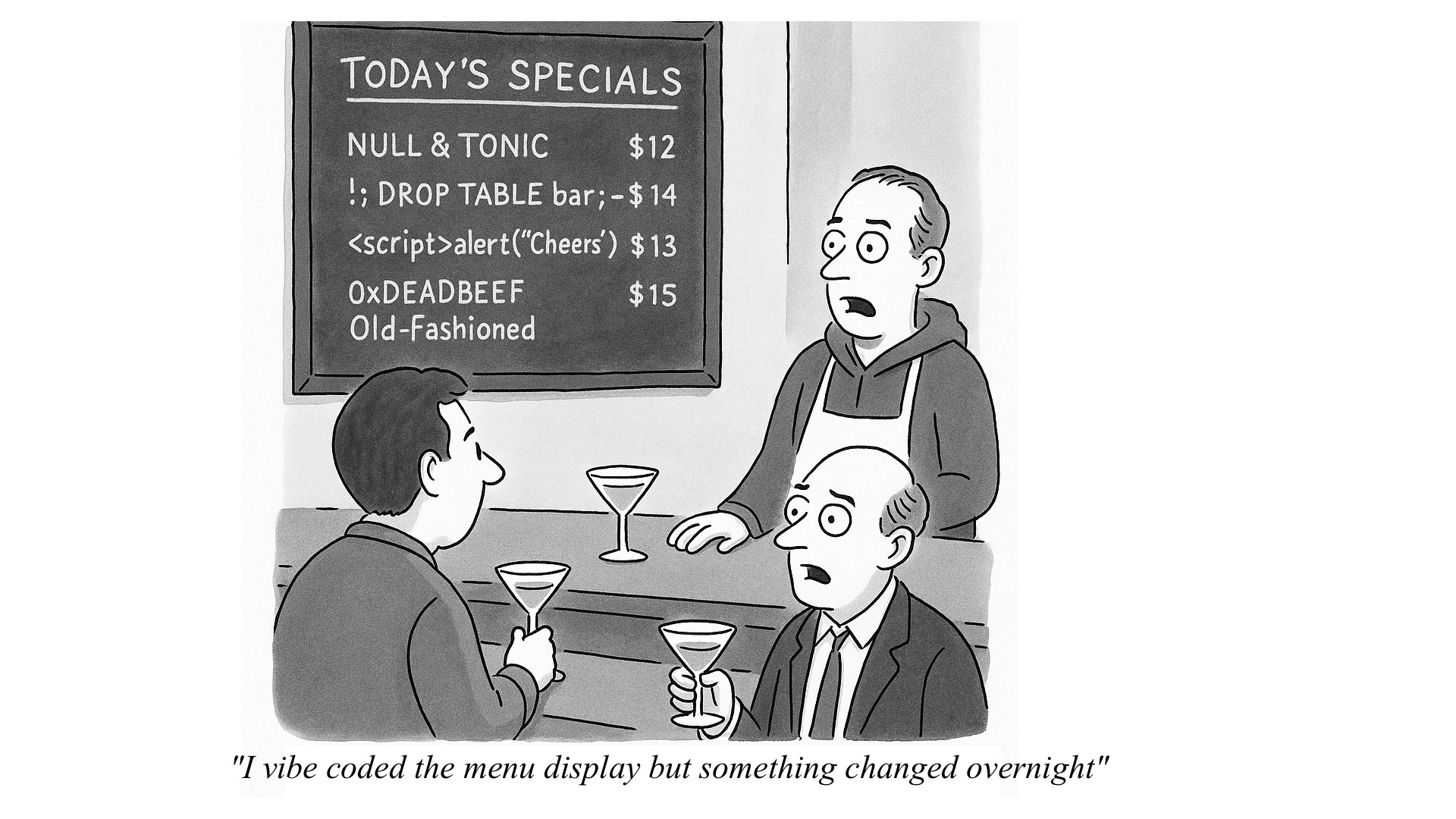

Just last month, Leonel Acevedo proudly announced his SaaS product Enrichlead was “built with Cursor, zero hand written code.” Two days later: “guys, i’m under attack …random things are happening.” His AI-generated code had hardcoded API keys in the codebase where users could access them. His subscription system was bypassed, API keys maxed out, database corrupted.

This has echoes of the early cloud issues. Leo could describe what he wanted, the AI could build it, but the critical security knowledge to prevent basic vulnerabilities was missing. As one observer noted: “there’s a growing and dangerous fantasy that technical knowledge is optional in the new AI-powered world.”

The Scale of the Problem

The problem goes beyond individual incidents. Platforms are incentivized to prioritize ease of use over security, while creators lack both knowledge to demand better security and expertise to implement it. Security researchers demonstrated platforms like Lovable could create convincing phishing pages with minimal guardrails—earning a “VibeScamming” score of 1.8 out of 10.

We’re democratizing software creation without democratizing security expertise. It’s like giving everyone industrial equipment without safety training.

An AI-Native Security Solution

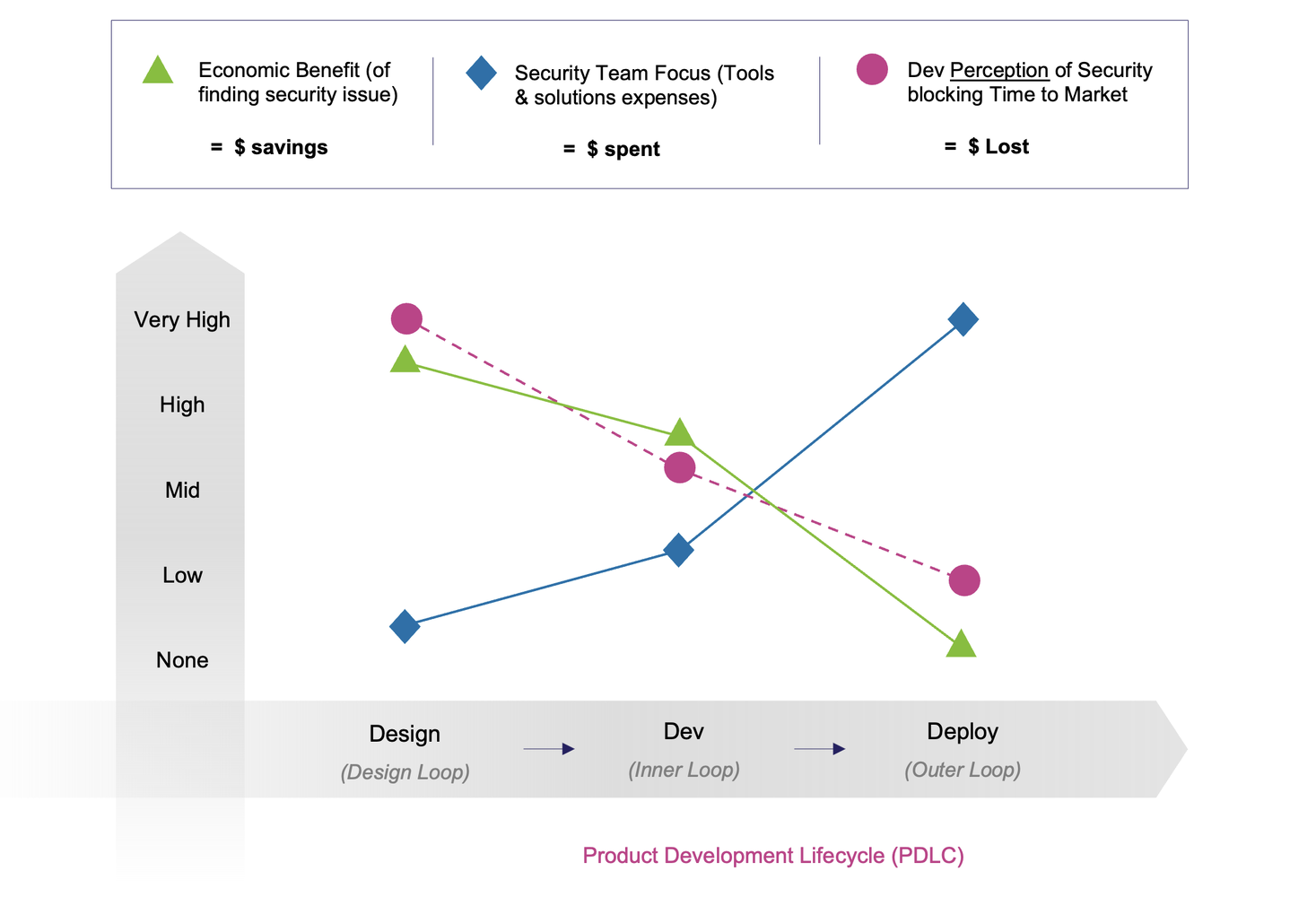

Traditional security approaches—penetration testing, code reviews, compliance frameworks—assume human-written code and expert oversight. They’re poorly suited to the scale and nature of vibe-coded applications. We need an approach as AI-native as the development process itself.

1. Proactive AI Security

Instead of bolting security onto finished applications, AI systems should analyze designs in real-time, identifying architectural vulnerabilities before implementation. Think of it as a security-aware pair programming partner that understands both technical risks and business context. When you describe user authentication, it automatically implements OAuth with proper session management instead of basic passwords.

2. Continuous Intelligence

Traditional CI/CD pipelines evolved for human developers who understand what they deploy. Vibe coding requires continuous AI analysis that can process the entire lifecycle of AI-generated code. As an example, Static Application Security Testing (SAST) tools that understand the patterns and weaknesses specific to AI-generated code.

3. Autonomous Penetration Testing & Red Teaming

When thousands of micro-SaaS applications are created daily, manual security testing becomes impossible. AI-driven testing systems that can simulate sophisticated attacks across entire platforms represent the only scalable solution.

Security as Competitive Advantage

The platform that becomes the secure choice for vibe coding will capture risk-sensitive markets: enterprise internal tools, regulated industry applications, financial technology solutions. Rather than viewing security as a barrier to growth, smart platforms will make security as invisible and automated as the coding process itself.

The future of vibe coding isn’t about choosing between accessibility and security—it’s about making security invisible, handled automatically by AI systems that understand both what you’re building and how to protect it. As barriers to entry fall, the guardrails often do too—unless security is embedded by default. The platforms that solve this first will define what comes next in software development.