· Chiradeep Vittal · blog · 7 min read

Practical Advice to Secure Your Vibe-Coded App

Building with AI doesn't mean abandoning security—it means rethinking how you approach it.

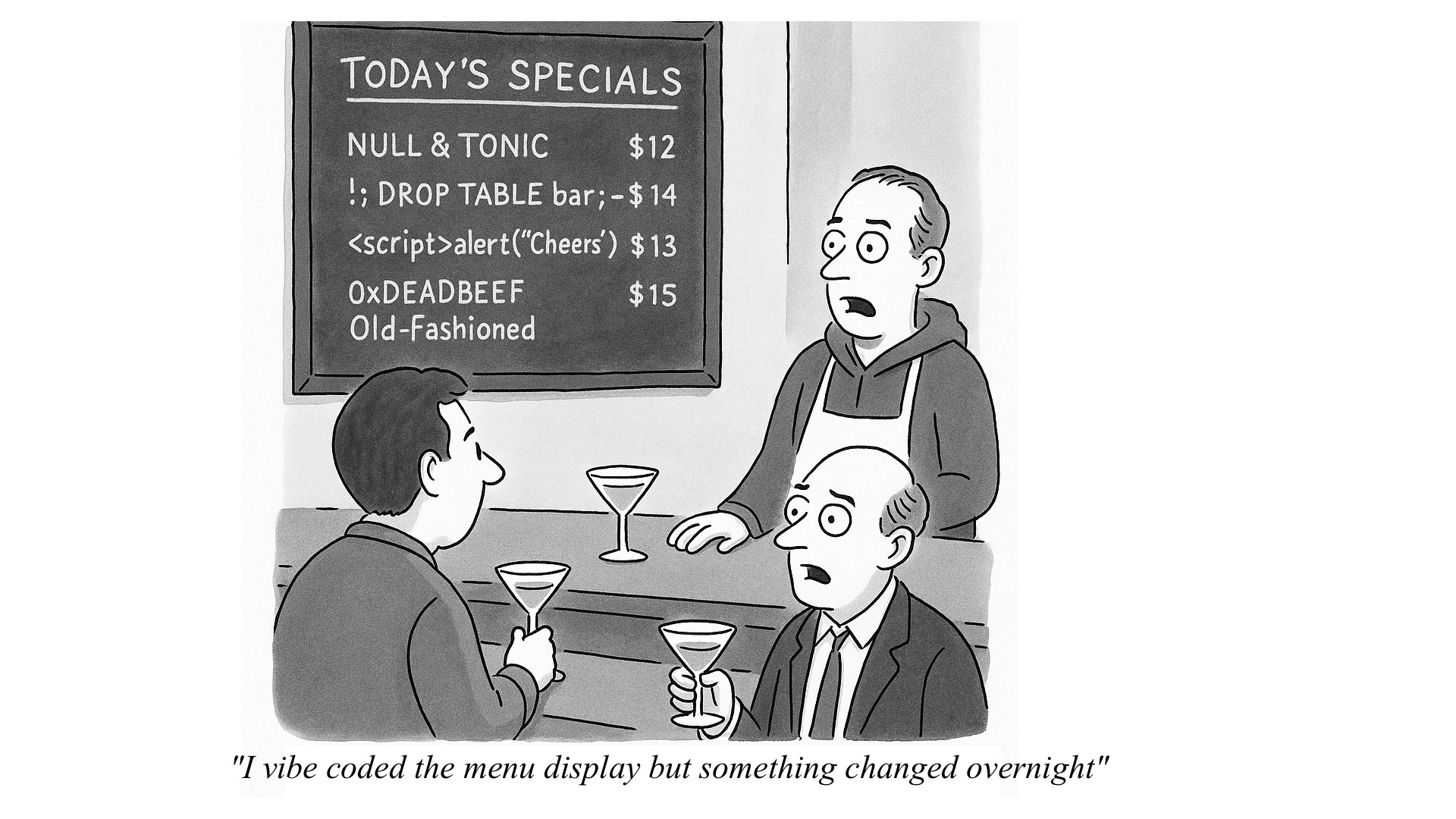

In a previous post, we highlighted the security perils and pitfalls of using AI-assisted coding to build applications. But building with AI doesn’t mean abandoning security; it means rethinking how you approach it. You don’t need to become a security expert overnight, but you do need to make security part of your conversation with AI from day one. Whether you are truly vibe-coding (letting the agent write 100% of the code) or using it as a coding sidekick, here are some tips to secure your app across design, implementation, and deployment without losing momentum. Note: we focus on Cursor’s AI agents in this post, but the ideas can be applied with most agents.

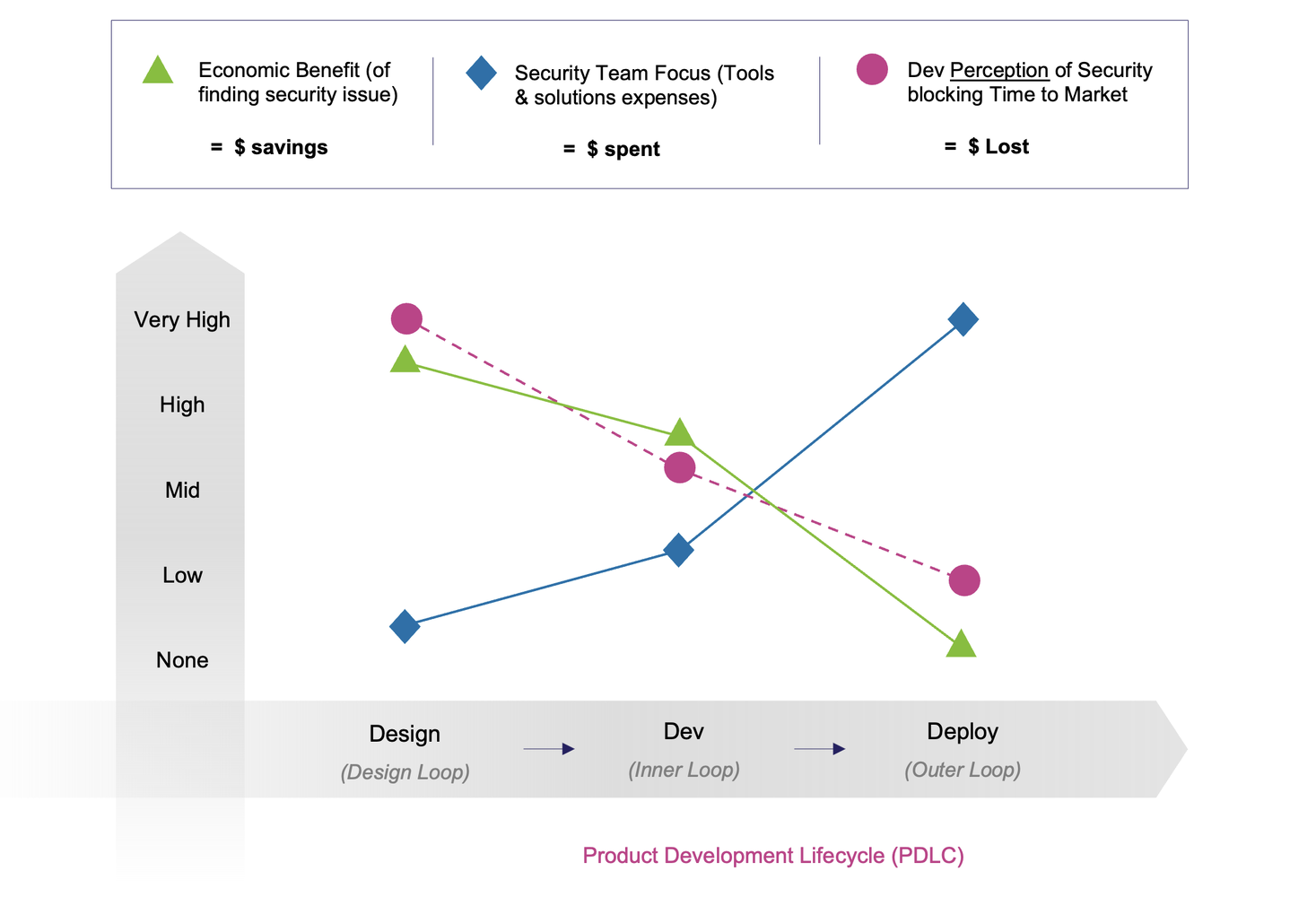

Design Phase: Start with Security in Mind

AI excels at implementing security patterns when given clear direction. Before writing code, it is a good practice to come up with a design document (with AI’s assistance). This document should emphasize security principles. Here’s a potential prompt for Claude or ChatGPT to generate a design document to start with.

Example Design Prompt:

I'm building a feature that [your use case].

## Flow 1

## Flow 2

...

## Secure by design

You will follow the best practices in the OWASP Application Security Verification Standard (ASVS) level 2. Create a list of assets to be protected and the possible security threats against them. Design mitigations for each threat.

## Security testing

1. Write security validations that validate ASVS practices

2. Write security validations that test the mitigations

Generate a design document suitable for an AI agent to implement this feature.

This approach leverages AI’s knowledge of security standards while keeping the conversation focused on your specific needs. The reference to ASVS (Application Security Verification Standard) helps ensure your requirements align with established best practices. By emphasizing testing, the AI will keep testability and security validation in context when designing and implementing the feature.
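To make the "assets, threats, mitigations" part of the prompt concrete, here is a minimal Python sketch of the kind of threat register such a design document might contain. The class names, fields, and example entries are all hypothetical and chosen for illustration; they are not something ASVS or any AI agent prescribes. The helper simply flags threats that have no mitigation yet, or a mitigation with no validation test.

```python
from dataclasses import dataclass, field


@dataclass
class Threat:
    description: str
    mitigation: str = ""        # empty string means "not designed yet"
    validation_test: str = ""   # name of the test that exercises the mitigation


@dataclass
class Asset:
    name: str
    threats: list[Threat] = field(default_factory=list)


def find_gaps(assets: list[Asset]) -> list[str]:
    """Return one message per threat that lacks a mitigation or a test."""
    gaps = []
    for asset in assets:
        for threat in asset.threats:
            if not threat.mitigation:
                gaps.append(f"{asset.name}: no mitigation for '{threat.description}'")
            elif not threat.validation_test:
                gaps.append(f"{asset.name}: mitigation for '{threat.description}' is untested")
    return gaps


# Hypothetical entries, for illustration only
register = [
    Asset("user sessions", [
        Threat("session token theft", "short-lived tokens", "test_token_expiry"),
        Threat("session fixation", "rotate session ID on login"),
    ]),
    Asset("uploaded files", [Threat("path traversal in file names")]),
]

for gap in find_gaps(register):
    print(gap)
```

A register like this gives you a quick way to check that the design document the AI produced covers every threat it listed before the agent starts implementing.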

Implementation: Embed Security in Your AI Tools

Your AI coding assistants can serve as continuous security advisors, but only if you configure them properly. Most modern IDEs and platforms support custom rules that shape how AI generates code. Include the design document you generated, with its security principles, as a custom rule, or ensure that it is included in every code generation context. In addition, you can provide detailed security instructions. An example rule for Cursor follows (note: it is not comprehensive and is kept short for brevity).

Cursor Rules Example

Create a .cursor/rules/security_rules.mdc file in your project root:

---
description:
globs:
alwaysApply: true
---

You will attempt to adhere to the ASVS 4.0 Level 2 standards for authentication, JWT token management, validation, sanitization, encoding and injection prevention, deserialization prevention, etc. Follow good security practices such as Defense-in-Depth and the Principle of Least Privilege, and log all security-related actions.

Especially look for business logic flaws that can be used by an attacker.

1. Authentication and Authorization:

- Use strong password hashing (bcrypt/Argon2)

- Implement proper session management

- Use JWT with appropriate expiration

- Implement role-based access control (RBAC)

- Use secure password reset flows

- Follow principle of Least Privilege

2. API Security:

- Use HTTPS for all communications

- Implement rate limiting

- Validate and sanitize all inputs

- Use proper CORS policies

- Use secure headers (HSTS, CSP, etc.)

3. Data Protection:

- Encrypt sensitive data at rest

- Use secure key management

- Implement proper data sanitization

- Use parameterized queries

- Implement proper data access controls

- Use secure file handling

- Implement data retention policies

4. Security Headers:

- Set appropriate security headers

- Use Content Security Policy

- Implement XSS protection

- Use HSTS

- Set secure cookie flags

- Implement frame protection

- Use proper CORS settings

5. Secure Configuration:

- Use environment variables for secrets

- Implement secure configuration management

- Use different configs for environments

- Use secure defaults

- Implement proper error handling

6. Error Handling:

- Don't expose sensitive information

- Use proper error messages

- Implement secure logging

- Handle errors gracefully

- Use proper status codes

7. Security Testing:

- Implement security tests

- Test authentication flows

- Test authorization rules

- Test input validation

- Test error handling

For online platforms like Lovable.dev, use their knowledge.md file.
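As an illustration of what one of the rules above ("Use parameterized queries") should lead the agent to generate, here is a minimal sketch. It uses Python's standard-library sqlite3 module as a stand-in so that it runs anywhere; your own stack will use a different driver or ORM. The table and function names are hypothetical.

```python
import sqlite3


def find_user(conn: sqlite3.Connection, email: str):
    """Look up a user by email using a bound parameter."""
    # The "?" placeholder makes the driver treat `email` strictly as data,
    # so quotes inside it cannot change the structure of the SQL statement.
    cur = conn.execute("SELECT id, email FROM users WHERE email = ?", (email,))
    return cur.fetchone()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL)")
conn.execute("INSERT INTO users (email) VALUES (?)", ("alice@example.com",))

legit = find_user(conn, "alice@example.com")   # matches the stored row
injected = find_user(conn, "' OR '1'='1")      # classic injection string, matches nothing
print(legit, injected)
```

If the generated code instead builds SQL by concatenating or formatting user input into the query string, that is the pattern the rule is meant to catch during review.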

Code Review: Turn AI into Your Security Auditor

After the AI agent generates the code, prompt the AI to review changes through a security lens:

Security Review Prompt (Cursor example):

Please review the last changeset as a security auditor. Use the rules in @security_rules.mdc to guide your review.

This approach transforms the agent from a code generator into an active security reviewer, catching issues before they reach production. For more assurance, you can use a different AI to review the code; for example, if you chose Claude as your coding agent in Cursor, you can use Gemini to review the code from a security perspective.
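If you want to hand the same review to a second model outside Cursor, one possible approach is to assemble the prompt yourself from the latest commit and your rules file. The sketch below is an assumption about how you might do that, not a Cursor feature: the function names are hypothetical, it assumes the project is a git repository, and it leaves the actual call to the reviewing model up to you.

```python
import subprocess


def last_changeset(repo_dir: str = ".") -> str:
    """Return the patch introduced by the most recent commit."""
    result = subprocess.run(
        ["git", "-C", repo_dir, "show", "--patch", "--format=", "HEAD"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


def build_review_prompt(diff: str, rules: str) -> str:
    """Combine the security rules and a diff into a single review prompt."""
    return (
        "Please review the following changeset as a security auditor.\n"
        "Use these rules to guide your review:\n\n"
        f"{rules.strip()}\n\n"
        "Changeset:\n\n"
        f"{diff.strip()}\n"
    )


# Example usage (run inside a git repository that contains the rules file):
#   from pathlib import Path
#   rules = Path(".cursor/rules/security_rules.mdc").read_text()
#   print(build_review_prompt(last_changeset(), rules))
```

Putting the rules before the diff keeps the review criteria in front of the model regardless of how long the changeset is.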

Automated Security Scanning

Integrate security tools directly into your development workflow using security scanners through MCP (Model Context Protocol) servers. For example, you can run static analysis using Semgrep’s MCP server that Cursor can integrate with. Here is the MCP server configuration for Cursor.

{
  "mcpServers": {
    "semgrep": {
      "command": "uvx",
      "args": ["semgrep-mcp"]
    }
  }
}

Now you can prompt Cursor: “Run Semgrep on the authentication module and explain any findings.” The agent will execute the scan and provide context-aware explanations of security issues. You can also add a Cursor rule such as “Run Semgrep after every generation” so that the scan runs automatically.
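If you also run Semgrep outside the agent (for instance in CI), a small script can summarize its JSON report. The sketch below assumes the report follows Semgrep's JSON output layout, with a top-level "results" list whose entries carry "check_id", "path", "start.line", and "extra.severity". The sample report is hand-written for illustration, including a hypothetical rule ID and file path; it is not real scanner output.

```python
import json
from collections import Counter


def summarize_findings(report_json: str) -> tuple[Counter, list[str]]:
    """Count findings per severity and build one summary line per finding."""
    report = json.loads(report_json)
    counts: Counter = Counter()
    lines = []
    for finding in report.get("results", []):
        # Severity labels depend on the rules in use, so group by whatever appears
        severity = finding.get("extra", {}).get("severity", "UNKNOWN")
        counts[severity] += 1
        location = f"{finding['path']}:{finding['start']['line']}"
        lines.append(f"{severity} {location} {finding['check_id']}")
    return counts, lines


# Hand-written sample in the assumed report shape (not real output)
sample_report = json.dumps({
    "results": [
        {
            "check_id": "python.hypothetical.sql-injection",
            "path": "app/auth.py",
            "start": {"line": 42, "col": 5},
            "extra": {"severity": "ERROR", "message": "Possible SQL injection"},
        }
    ],
    "errors": [],
})

counts, lines = summarize_findings(sample_report)
print(dict(counts))
print("\n".join(lines))
```

A report like this can be produced with a command along the lines of `semgrep scan --config auto --json --output report.json`; adjust the rule configuration to whatever your project uses.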

Deployment and Continuous Security

Security doesn’t stop at code deployment. Even securely coded applications can become vulnerable through misconfigured or inadequately secured infrastructure. Additionally, the AI may have used libraries that were already vulnerable, or that have since acquired new vulnerabilities that have not been mitigated. Product security teams use several tools (software supply chain, static analysis, dynamic application testing, cloud security posture management) to manage these cross-cutting issues. If you have access to these tools, and they happen to have MCP servers, you can use AI agents to automate some of the testing. For example, Appaxon’s red-teaming agent can be run as an MCP server.

Stack-Specific Customization

Security requirements vary significantly based on your technology stack. A Next.js app deployed on Vercel has different security considerations than a React app with a FastAPI backend on AWS. The sample prompts and rules may not be optimal for your scenario and will need to be tweaked. Here, once again, you can use the AI (Claude is excellent) to help you customize the rules for your stack. For example:

The following is a set of generic security rules. Customize and enhance it for my stack that consists of:

- React 18 / Vite / Tailwind / Shadcn for the frontend

- FastAPI / SQLAlchemy / Pydantic / Postgres 16 for the backend

- The following AWS components:

  - EKS for compute

  - EFS for storage

  - S3 for file storage

  - AWS API GW for ingress

  - AWS Aurora RDS (Postgres)

  - AWS Secrets Manager

<original rules here>

The Bottom Line

You can secure vibe-coded / AI-generated applications by just asking the AI to be your security partner. By establishing security requirements upfront, configuring your tools with security-first rules, and using AI for continuous security review, you can build robust applications without getting overwhelmed. If this guidance still fazes you, don’t hesitate to reach out to a security expert to help you craft your security strategy.

Remember: these approaches provide a strong foundation, but security is an ongoing process. Regular updates to your security rules, staying informed about new threats, and periodic professional security reviews will keep your application resilient as it evolves.

Need Help Securing Your Agent-assisted Code?

Every tech stack and use case has unique security considerations. If you’re building something mission-critical or handling sensitive data, we’d be happy to help you develop tailored security practices for your specific AI-assisted coding setup. Sometimes a quick conversation can save weeks of potential headaches down the road.

Get in touch if you’d like personalized guidance on securing your AI-powered development process.