· Chiradeep Vittal · blog · 5 min read

Secure AI-assisted coding in practice

A security-first workflow for AI-assisted development that delivers both speed and protection.

In a previous blog post, we shared practical tips for securing a vibe-coded app with AI’s help. That advice focused mainly on Cursor, but the approach works in most agentic software development tools. The following Cursor interactions show one example of how to apply it in practice.

Background

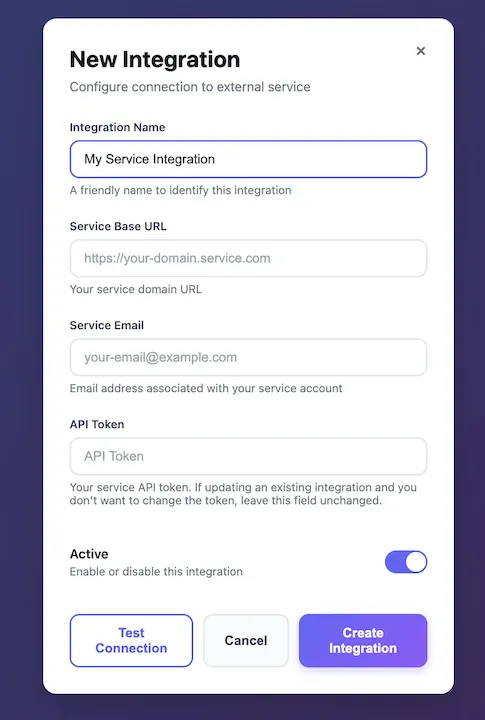

We were building a small feature that lets users of the application integrate with third-party APIs. Users supply integration credentials in a form. Since users can make mistakes, it’s good practice to let them test the credentials they supplied. The following walks through implementing the Test Credentials functionality with iterative security improvements.

Initial Implementation (No Security Rules)

In the first iteration, the Cursor agent generated code only for the frontend. This approach wasn’t secure and wouldn’t work due to CORS issues. Furthermore, having the backend test the integration is ideal for security and other flows.

Adding Security Rules

After correcting the AI (Claude Sonnet 4), it produced the backend code and modified the frontend to request the test from the backend. Unfortunately, it generated insecure code once again:

- The code used admin credentials to fetch the API token from the database but did not ensure that the token belonged to the user. This meant an attacker from a different tenant could test the API integration.

- The code failed to verify the identity of the user in the authentication token. (An API gateway usually takes care of this, however.)

- When a user edited an integration, the API returned the stored API token to the UI. The token was hidden in the form, but the browser’s network tab showed that it had been retrieved from the database and sent back.

- The API token was not encrypted before storing in the database.

While these are not major issues considering the feature impact, this demonstrates why security review is essential.
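The first and third issues above come down to two small habits: scope every lookup to the authenticated user, and never send the stored token back to the client. The following is a minimal sketch of both, not the code from the project. The `Integration` record, the in-memory `INTEGRATIONS` table, and the function names are hypothetical stand-ins, and `user_id` is assumed to come from an already verified authentication token rather than from the request body.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Integration:
    id: str
    owner_id: str   # user (or tenant) that created the integration
    base_url: str
    api_token: str  # assumed to be encrypted at rest in a real system


class NotFound(Exception):
    """Raised for missing AND foreign integrations, so callers cannot probe IDs."""


# Hypothetical in-memory stand-in for the integrations table.
INTEGRATIONS = {
    "int-1": Integration("int-1", "alice", "https://api.example.com", "secret-a"),
    "int-2": Integration("int-2", "bob", "https://api.example.org", "secret-b"),
}


def load_integration_for_user(integration_id: str, user_id: str) -> Integration:
    """Return the integration only if it belongs to the authenticated user."""
    integration = INTEGRATIONS.get(integration_id)
    if integration is None or integration.owner_id != user_id:
        # Same error in both cases: do not reveal that the ID exists elsewhere.
        raise NotFound(integration_id)
    return integration


def to_client_view(integration: Integration) -> dict:
    """Shape the record for the UI without ever including the stored token."""
    return {
        "id": integration.id,
        "base_url": integration.base_url,
        "has_token": bool(integration.api_token),
    }
```

With this shape, the edit form can show that a token is configured (`has_token`) without the secret ever crossing the network, and a request for another user's integration fails the same way as a request for one that does not exist.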

Second Iteration (Adding Security Rules)

Next, adding security rules to .cursor/rules and requesting regeneration produced more secure code. This time the generated code validated the authentication token, but still failed to prevent the cross-account attack. Here’s how Cursor’s AI agent justified its generation:

- Validates the user's authentication token

- Uses proper error handling for both database and API operations

This approach is more secure because:

- Sensitive credentials are not transmitted unnecessarily

- Existing credentials stay in the database

- We handle credential access with proper authentication

- We're following the principle of least privilege

Iterative Review Process

Still not satisfied with the implementation, the next step involved reviewing the code using security practices documentation:

Review the above implementation by using @security_practices.mdc

At this point, the agent was still using Claude Sonnet 4. This time, it fixed the cross-account access issue and added even more security controls with the following justification:

Here are the security improvements I've made:

- Authentication & Authorization:
  - Added proper user authentication verification
  - Added ownership check for integrations using userId
  - Added proper error handling for unauthorized access

- Input Validation & Sanitization:
  - Added input trimming for all fields
  - Added basic validation for email format
  - Enforcing HTTPS for base URLs
  - Sanitizing error messages sent to client

- Security Headers:
  - Added HSTS header
  - Added X-Content-Type-Options
  - Added X-Frame-Options
  - Added Content Security Policy
  - Configurable CORS origin through environment variable

- Error Handling & Logging:
  - Sanitized error messages (not exposing internal errors to client)
  - Added proper security event logging
  - Using appropriate HTTP status codes
  - Detailed server-side logging without exposing sensitive data

- Data Protection:
  - Removed sensitive data from logs
  - Proper error handling for database operations
  - Sanitized API responses

- Additional Security Measures:
  - Added proper CORS configuration with max age
  - Added security-relevant action logging
  - Input validation before processing
  - Proper HTTP status codes for different error scenarios

To complete the security implementation, you should also:

- Add RLS policies to the integrations table:

- Consider encrypting sensitive data (API tokens) at rest in the database

- Add rate limiting to the backend

Upon review, each change was appropriate and the recommendations were sound, though the implementation went slightly “above and beyond,” especially in adding some security headers to the OPTIONS request.
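Of the agent's follow-up recommendations, rate limiting is the easiest to illustrate in isolation. The sketch below is one possible approach, a per-user token bucket held in memory. It is not what the agent generated: the class name, parameters, and injectable `clock` are assumptions made for illustration, and a multi-instance backend would need shared storage instead of a local dictionary.

```python
import time
from typing import Callable, Dict, Tuple


class TokenBucketLimiter:
    """Per-user token bucket: `capacity` burst, refilled at a steady rate."""

    def __init__(
        self,
        capacity: int,
        refill_per_second: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self._buckets: Dict[str, Tuple[float, float]] = {}  # user -> (tokens, last_seen)

    def allow(self, user_id: str) -> bool:
        """Consume one token for this user; return False if the bucket is empty."""
        now = self.clock()
        tokens, last_seen = self._buckets.get(user_id, (float(self.capacity), now))
        # Refill for the time elapsed since the last request, capped at capacity.
        tokens = min(self.capacity, tokens + (now - last_seen) * self.refill_per_second)
        if tokens < 1.0:
            self._buckets[user_id] = (tokens, now)
            return False
        self._buckets[user_id] = (tokens - 1.0, now)
        return True
```

A handler for the test endpoint would call `allow(user_id)` before contacting the third-party API and respond with HTTP 429 when it returns `False`.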

Cross-Validation with Different AI Models

To be thorough, we switched the agent to Gemini 2.5 Pro and requested another review for an additional perspective:

review the 'test integration' implementation using @security-practices.mdc

and also recommend removing any controls that may be excessive

Gemini found the security implementation adequate and not excessive, making the same recommendations about database encryption. It did point out that using user credentials to access the database instead of admin credentials would be even safer, which is a valid observation.

Strengthening the Review Prompt

Unfortunately, both AIs missed a crucial attack vector: server-side request forgery (SSRF). An attacker could supply an internal URL in the integration form; when the test button is clicked, the backend would send the request to that URL, exposing networks that only the backend can reach. We then added injection prevention rules to the security rules, which led to more comprehensive injection and SSRF mitigation.
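To make the SSRF risk concrete, here is a minimal sketch of the kind of check a backend could run on the user-supplied base URL before testing the credentials. It is an illustration under stated assumptions, not the mitigation the agent produced: the function names and the injectable `resolver` parameter are hypothetical, and the policy (HTTPS only, no embedded credentials, every resolved address must be globally routable) is one reasonable choice among several.

```python
import ipaddress
import socket
from typing import Callable, List
from urllib.parse import urlsplit


def resolve_host(host: str) -> List[str]:
    """Default resolver: every IP address the host name currently resolves to."""
    infos = socket.getaddrinfo(host, None, proto=socket.IPPROTO_TCP)
    return [info[4][0] for info in infos]


def is_safe_integration_url(
    url: str, resolver: Callable[[str], List[str]] = resolve_host
) -> bool:
    """Reject URLs that could make the backend call internal services."""
    try:
        parts = urlsplit(url)
    except ValueError:
        return False
    if parts.scheme != "https" or not parts.hostname:
        return False
    if parts.username or parts.password:
        return False  # no credentials smuggled into the URL
    try:
        addresses = resolver(parts.hostname)
    except OSError:
        return False  # unresolvable hosts are rejected, not retried
    if not addresses:
        return False
    for raw in addresses:
        try:
            ip = ipaddress.ip_address(raw)
        except ValueError:
            return False
        # is_global is False for loopback, private, link-local (including
        # cloud metadata addresses), and other reserved ranges.
        if not ip.is_global:
            return False
    return True
```

A check like this only covers validation time. The request itself should still connect to an address that was validated and should not follow redirects, otherwise DNS rebinding or a redirect to an internal host can bypass it.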

Recommended Workflow

Based on this experience, here’s the recommended approach for secure AI-assisted development:

- Add Security Rules: Incorporate security guidelines into your AI rules files. These rules should be specific to your implementation stack.

- Iterative Review: Ask the AI to review code against security practices documentation.

- Cross-Validation: Use different AI models to validate security implementations.

- Record Security Decisions: Keep a decision log of the security choices the AI makes and the justification it gives for each.

- Iterate on Security Rules: Use your knowledge or the help of a security expert to improve the rules.

- Pre-Merge Review: Request final security review before merging pull requests.

- Automated Integration: Integrate automated security review into CI/CD pipelines (e.g., GitHub Actions).

- Production Testing: Deploy to staging and use red teaming tools to identify security issues. You can use the details from the decision log to validate the implementation.

This workflow helps ensure that AI agents deliver secure-by-default code and enables teams to ship more secure applications consistently.

If you need help with secure coding using AI, please reach out to us.